Earlier I wrote about how we created a collection of 3D products with patterns generated by a neural network and showed it at MBFW, and about our experience with real knitwear production. This time I would like to tell you about a project that we carried through from beginning to end, working out the smallest details at every stage.

We live in a three-dimensional world, and an ordinary 2D sketch cannot fully convey the author's ideas. Sometimes even the designers themselves do not fully understand how their creation will look in real life. Designing products in 3D space helps to get all the proportions right and to build patterns (or modify standard ones) on the spot. This shortens the process of creating a garment: there is no longer any need to make three physical samples, and the customer understands what the result will be and can immediately see all the color variations.

Together with the local Rosee Knitwear Factory, we released an experimental drop of knitted accessories (hats, scarves, balaclavas).

https://www.instagram.com/p/CHpzUh3KPXt/

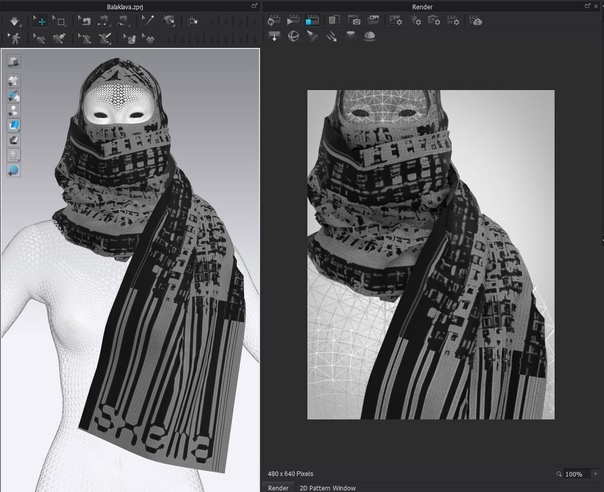

We started by building 3D visualizations of the models and exporting the patterns. By mapping the real dimensions in centimeters to pixels, we obtained the number of stitches in each product. Our neural network generated images, and we selected the ones that fit the concept, then deliberately reduced their resolution so that one pixel corresponded to one stitch.
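The size-to-stitch mapping can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the gauge values (stitches and rows per 10 cm), the function names `stitch_grid` and `downsample`, and the block-averaging approach are hypothetical and are not the actual pipeline used in the project.

```python
def stitch_grid(width_cm, height_cm, stitches_per_10cm, rows_per_10cm):
    """Convert a pattern piece's real size into a stitch grid (columns, rows).

    The gauge parameters are assumed inputs; real values depend on the
    yarn and the knitting machine.
    """
    cols = round(width_cm * stitches_per_10cm / 10)
    rows = round(height_cm * rows_per_10cm / 10)
    return cols, rows


def downsample(pixels, cols, rows):
    """Reduce a grayscale image (list of rows of 0..255 values) to
    cols x rows cells by averaging each block, so one cell = one stitch."""
    src_h, src_w = len(pixels), len(pixels[0])
    out = []
    for r in range(rows):
        # Source rows covered by this stitch row (at least one).
        y0 = r * src_h // rows
        y1 = max(y0 + 1, (r + 1) * src_h // rows)
        out_row = []
        for c in range(cols):
            # Source columns covered by this stitch column (at least one).
            x0 = c * src_w // cols
            x1 = max(x0 + 1, (c + 1) * src_w // cols)
            block = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


# Hypothetical example: a 20 x 10 cm piece at an assumed gauge.
cols, rows = stitch_grid(20, 10, stitches_per_10cm=20, rows_per_10cm=30)

# Tiny 4 x 4 checker image reduced to a 2 x 2 stitch grid.
image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [255, 255, 0, 0],
    [255, 255, 0, 0],
]
small = downsample(image, 2, 2)
```

In practice an image library would handle the resizing; the point here is only that the target resolution comes from the physical size and the gauge, not from the generated image.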

Based on the capabilities of the factory's equipment, we decided to knit the samples in two colors. Accordingly, we traced the images and split them into separate color layers. Once a knitting program has been created and approved, you can swap the yarn colors on the bobbins and get completely different effects. To check whether a particular color combination will "work", you can recolor the existing 3D model to match the Pantone values of the available yarn samples.
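The two-color split and the recoloring preview can be sketched in the same spirit. This is a simplified illustration, assuming a plain brightness threshold for the split; the function names `split_two_colors` and `recolor` are hypothetical, and the RGB tuples are placeholder values, not conversions of real Pantone swatches.

```python
def split_two_colors(gray, threshold=128):
    """Turn a grayscale stitch grid into a 0/1 mask:
    0 = first yarn, 1 = second yarn. The threshold is an assumed default."""
    return [[1 if value >= threshold else 0 for value in row] for row in gray]


def recolor(mask, yarn_a, yarn_b):
    """Build an RGB preview of the same knitting program with two yarn colors.

    The mask (the knitting program) stays fixed; only the colors change.
    """
    return [[yarn_b if cell else yarn_a for cell in row] for row in mask]


def count_stitches(mask):
    """Count how many stitches each yarn contributes, as (yarn_a, yarn_b)."""
    second = sum(sum(row) for row in mask)
    total = sum(len(row) for row in mask)
    return total - second, second


# Tiny 2 x 2 stitch grid for demonstration.
gray = [[0, 200], [130, 10]]
mask = split_two_colors(gray)

# Placeholder RGB colors standing in for two yarn samples.
dark_yarn = (10, 10, 10)
light_yarn = (240, 240, 240)
preview = recolor(mask, dark_yarn, light_yarn)

# A second colorway reuses the same mask with different yarn colors.
alt_preview = recolor(mask, (200, 30, 60), (250, 220, 180))
```

Keeping the mask separate from the colors mirrors the workflow described above: one approved program, many possible colorways.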